xAI introduces Grok-1.5V, its first multimodal model, which can process images as well as text

Elon Musk's startup xAI has announced its first multimodal model, Grok-1.5 Vision, or Grok-1.5V. Unlike previous versions, this model not only understands text but can also process visual content, including documents, charts, graphs, screenshots and photos.

Here's What We Know

According to xAI, Grok-1.5V is competitive with advanced multimodal models in areas such as interdisciplinary reasoning and document understanding. The company showed seven examples of the model's capabilities, ranging from turning a hand-drawn diagram into code to writing a fairy tale based on a child's drawing.

Comparing the performance of xAI's Grok-1.5V with similar models

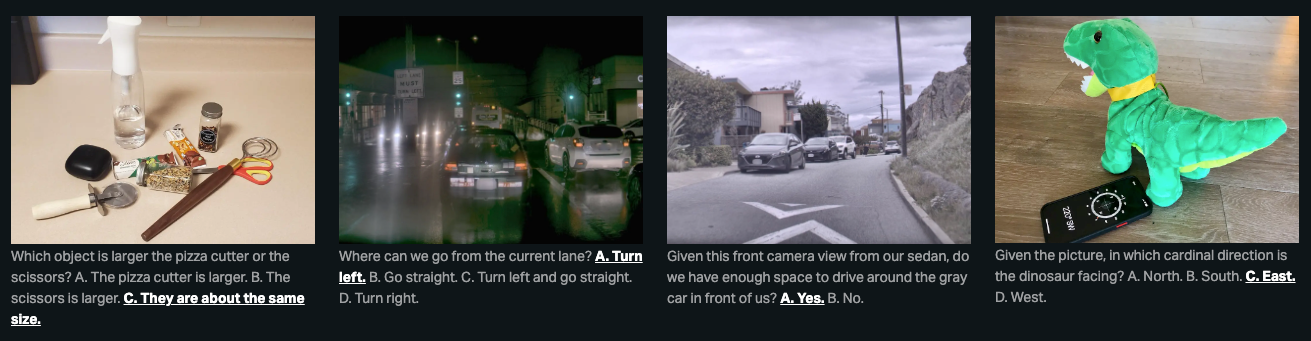

xAI tested Grok-1.5V against peers such as GPT-4V and Claude 3 and claims that its multimodal model outperforms the competition, especially on the new RealWorldQA benchmark, which is designed to assess real-world spatial understanding.

Grok-1.5V results in the RealWorldQA benchmark

The release of Grok-1.5V came shortly after xAI open-sourced Grok, the chatbot it unveiled in November 2023. Elon Musk's company continues to advance its AI development to compete with market leaders such as OpenAI. That said, Grok has previously run into problems with instructing users on how to engage in illegal behaviour.

In the coming months, xAI promises to make "significant" updates to Grok AI's multimodal understanding and information generation features.

Source: VentureBeat