Latest news

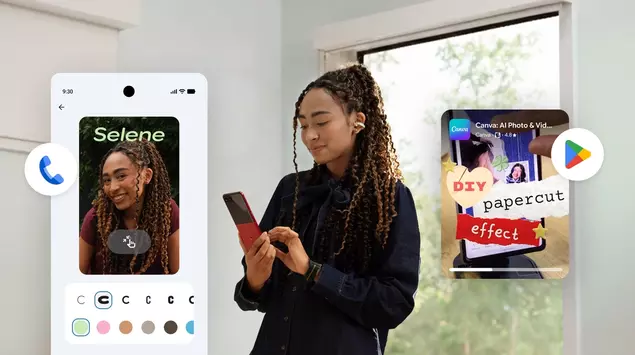

Gadgets

Gadget reviews

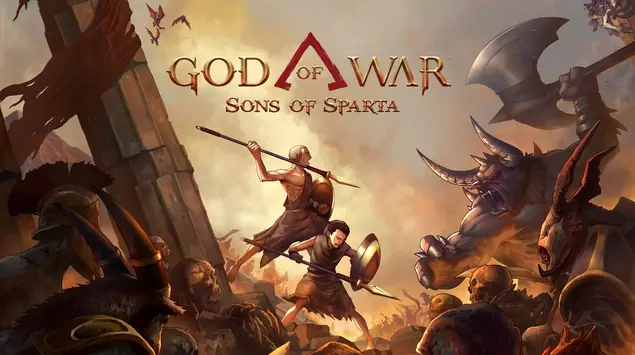

Games

Game reviews

Tech

Movies

Questions

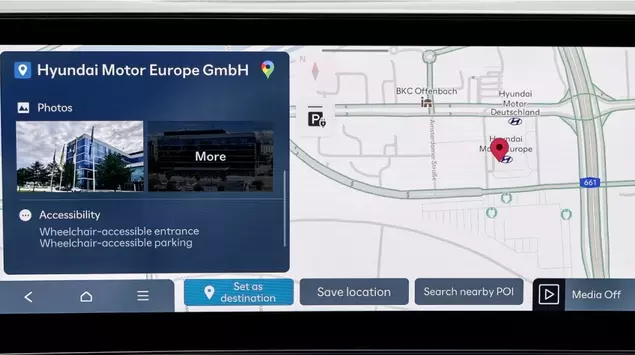

Cars

Military

Software