Apple removes AI apps that generate nude images

Apple has removed apps that use artificial intelligence to create nude images from its App Store. The decision follows growing concerns about the privacy and ethics of such apps.

Here's What We Know

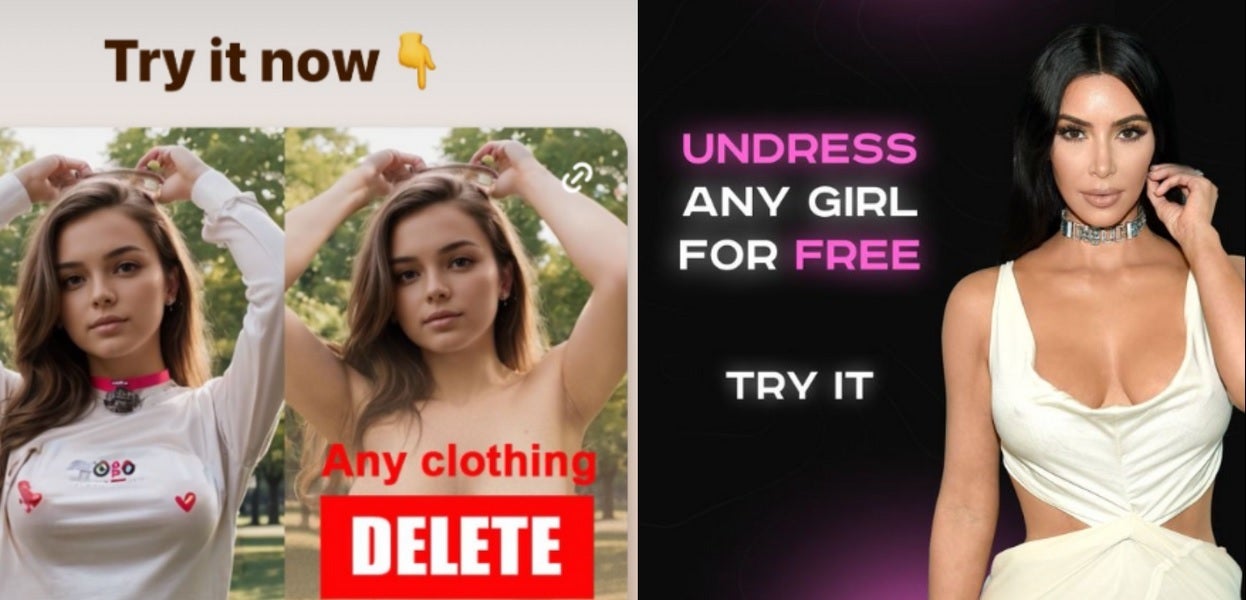

We recently wrote that Huawei was caught up in a scandal when an app on its new Pura 70 flagships allowed users to remove clothes from girls in photos using AI. Now Apple has found itself in a similar situation. The company has reportedly removed three apps from the App Store that described themselves as "art generators" but were promoted on Instagram and adult sites with claims that they could "undress any girl for free". Some of these apps offered an AI "undressing" feature, while others swapped faces onto adult images. Although the results do not show the person's actual body, such generated images can be used for harassment, blackmail and invasion of privacy.

404 Media reports that Apple removed the apps only after it was given links to the apps and their adverts. Before that, the company had been unable to locate them, even though the apps had reportedly been available since 2022 and were already advertising the "undressing" feature on adult sites. It is reported that these apps could remain on the App Store if they removed the adverts from 18+ sites.

The incident comes at a bad time for Apple: the company is preparing to hold its WWDC 2024 developer conference in June, where it is expected to make major artificial intelligence announcements for iOS 18 and Siri. Right now, Apple needs a sterling reputation for reliability, and this situation could damage it.

Source: 404 Media