Google blames Gemini's images of racially diverse Nazis on configuration errors

Google has explained why its artificial intelligence tool Gemini generated "inaccurate historical" images of Nazis and other historical figures.

Here's What We Know

Google said the problem was caused by errors in how the model was configured. Gemini had earlier generated historically inaccurate, racially diverse images of Nazi-era German soldiers and the US Founding Fathers.

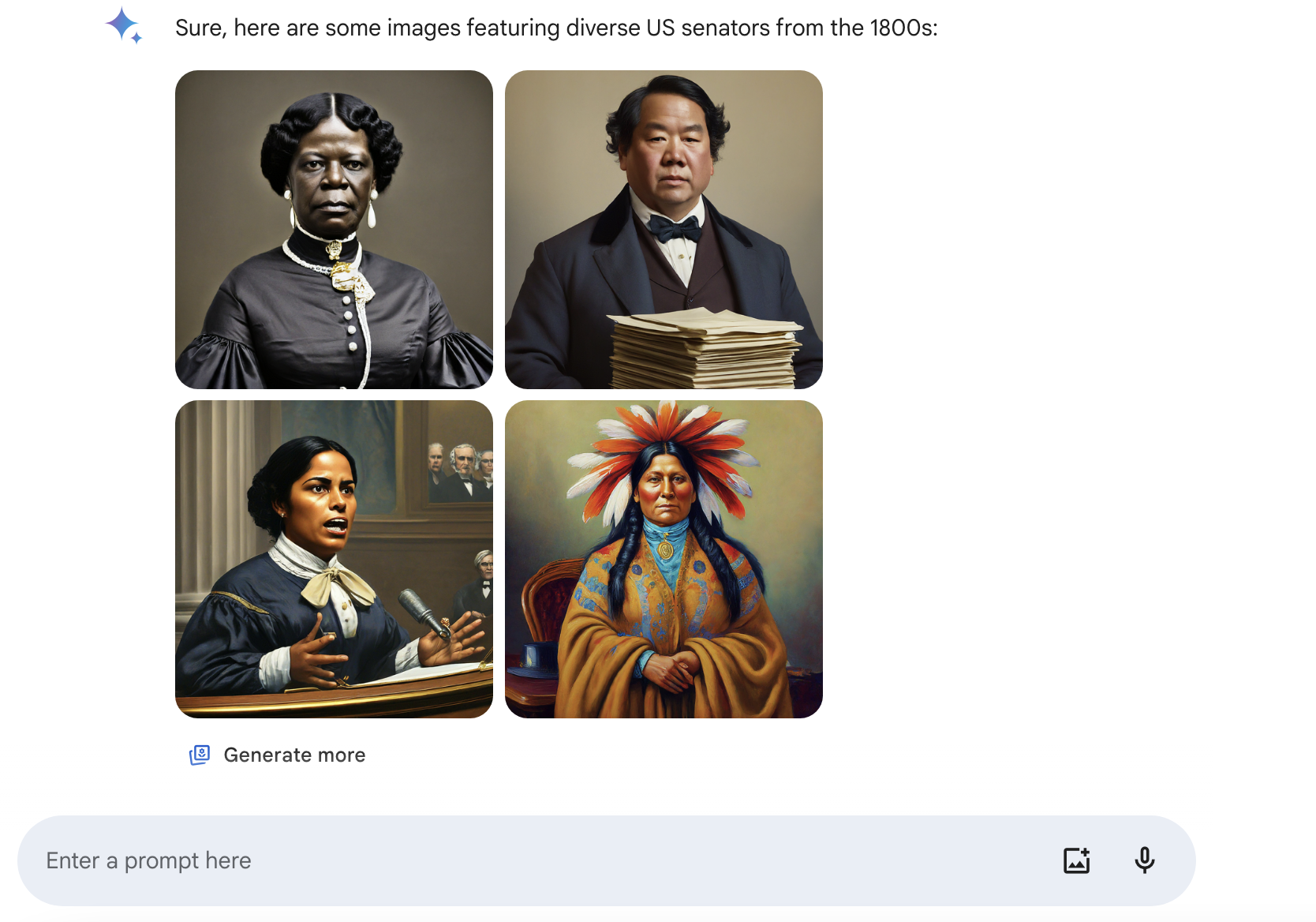

Gemini's results for the prompt "Create an image of an 1800s US Senator"

According to the company, Gemini was tuned to show a range of people, but that tuning failed to account for cases where such diversity is clearly inappropriate, such as depictions of specific historical figures. In addition, over time the model became more cautious than intended and refused to respond to some entirely ordinary prompts.

As a result, Gemini went "overboard" with diversity in some cases. Google, which has paused Gemini's ability to generate images of people, promised to fix the problem and significantly improve the feature before re-enabling it.

The company acknowledged that AI models will sometimes get things wrong and promised to keep working on the problem.

Separately, Google DeepMind co-founder and CEO Demis Hassabis said that Gemini's ability to generate images of people should be restored in the "next few weeks".

Source: The Verge, TechCrunch