Jensen Huang: AI hallucinations are solvable, and artificial general intelligence is 5 years away

NVIDIA

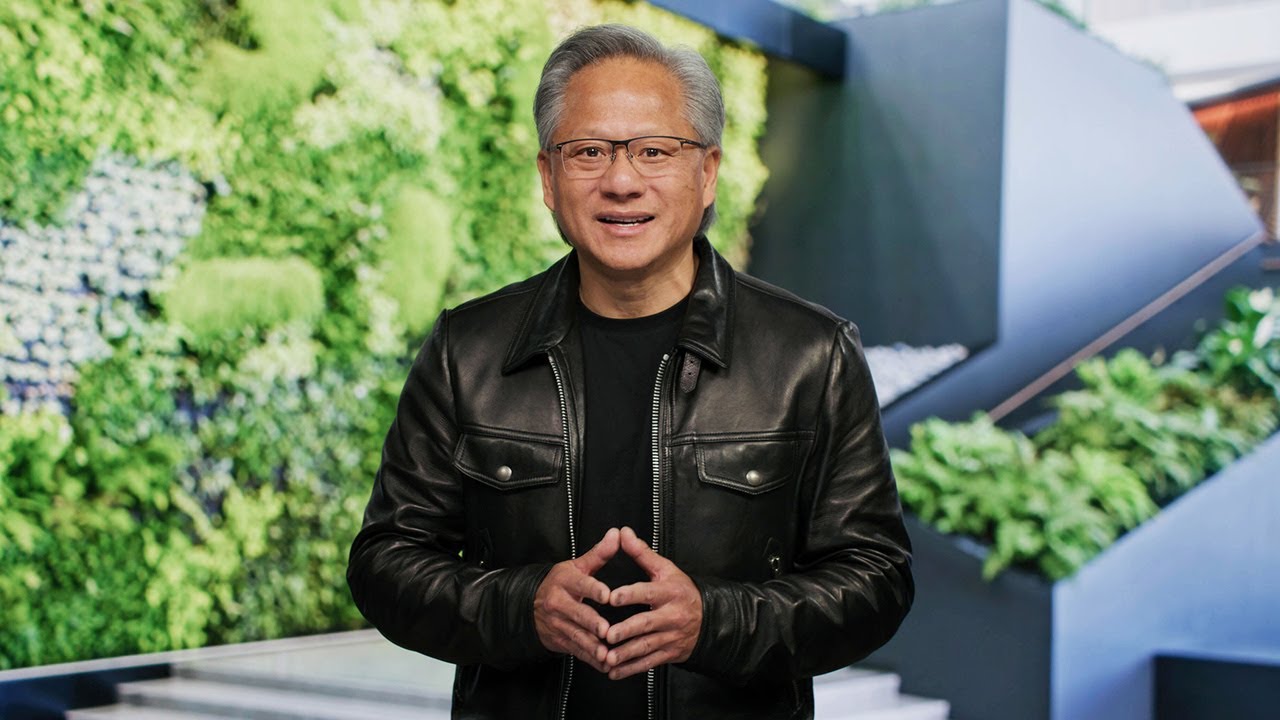

At NVIDIA's annual GTC conference, CEO Jensen Huang addressed two open questions: when artificial general intelligence (AGI) might arrive, and how to deal with hallucinations in AI responses.

Here's What We Know

According to Huang, AGI that performs at or above human level across a wide range of tasks could arrive within the next 5 years. However, the timeframe depends on how AGI is defined.

- "If we specified AGI to be something very specific, a set of tests where a software program can do very well, or maybe 8% better than most people, I believe we will get there within 5 years," Huang explained.

As examples of such tests, he named law exams, logic tests, economic tests, and medical entrance exams.

As for the problem of hallucinations, or the tendency of AI to produce false but plausible answers, Huang believes it is solvable. He suggested requiring systems to examine and confirm answers from credible sources before issuing a result.

- "Add a rule: For every single answer, you have to look up the answer," Huang said.

He said the process could be similar to fact-checking in journalism: compare facts from sources with known truths, and if the answer is partially inaccurate, discard the entire source.
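The fact-checking rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not anything Huang or NVIDIA presented: the `Source` class, the `filter_sources` and `answer` functions, the sample data, and the choice to return `None` when no trusted source is left are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    facts: dict[str, str]  # claim -> value asserted by this source


def filter_sources(sources, known_truths):
    """Keep only sources that never contradict a known truth."""
    trusted = []
    for source in sources:
        contradicts = any(
            claim in known_truths and known_truths[claim] != value
            for claim, value in source.facts.items()
        )
        if not contradicts:  # a single wrong fact discards the whole source
            trusted.append(source)
    return trusted


def answer(question, sources, known_truths):
    """Return (answer, source name) from the first trusted source, else None."""
    for source in filter_sources(sources, known_truths):
        if question in source.facts:
            return source.facts[question], source.name
    return None  # assumption: with no trusted source, decline instead of guessing


# Hypothetical data: site_b gets a known fact wrong, so all of it is discarded.
known = {"boiling point of water at sea level": "100 C"}
sources = [
    Source("site_b", {"boiling point of water at sea level": "90 C",
                      "capital of France": "Lyon"}),
    Source("site_a", {"boiling point of water at sea level": "100 C",
                      "capital of France": "Paris"}),
]

print(answer("capital of France", sources, known))
```

Here `site_b` is dropped entirely because one of its facts conflicts with a known truth, so the answer comes from `site_a` even though `site_b` was listed first.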

Source: TechCrunch