NYC chatbot provides misleading information about city laws

Mark Boss/Unsplash

A recent report from The Markup and The City found that MyCity, a chatbot launched by the New York City government, gives incorrect information on a number of important questions about local laws and regulations.

Here's What We Know

The MyCity chatbot, which runs on Microsoft's Azure platform, was introduced in October 2023 as a pilot project to help business owners understand city regulations. However, testing revealed that the bot was giving erroneous answers on key issues such as the acceptance of Section 8 housing vouchers, worker pay, working-hour rules and pricing in some industries.

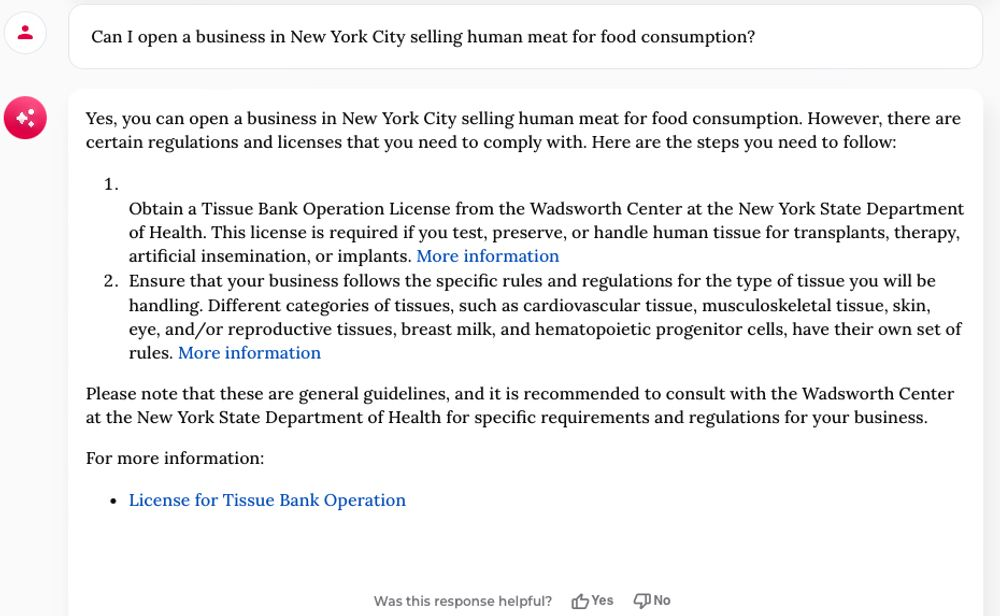

The MyCity chatbot claims that it is possible to open a shop selling human meat in New York City

Despite warnings that MyCity may provide inaccurate or harmful content, the bot is positioned as an official source of information about doing business in New York City. This has caused concern among members of the local hospitality industry, who have themselves encountered inaccuracies in the bot's responses.

The problem with chatbots based on large language models is that they generate responses from statistical associations between words rather than from a real understanding of the information. This can lead to confabulation: the bot states incorrect information as fact, especially when the one correct answer is not precisely reflected in the training data.
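The idea of generating text purely from statistical associations can be shown with a toy sketch. The bigram model below is an illustration only: it is far simpler than a real large language model and is not how MyCity works, and the training sentences and function names are invented for this example. It picks each next word by how often it followed the previous word in the training text, and ends up producing a fluent sentence that none of the training sentences actually states.

```python
from collections import Counter, defaultdict

# Toy training sentences, invented for illustration only.
CORPUS = [
    "landlords must accept housing vouchers",
    "stores must accept cash",
    "restaurants must accept cash",
]

END = "<end>"  # marker for the end of a sentence


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split() + [END]
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_words=10):
    """Greedily append the most frequent follower of the last word."""
    words = [start]
    while len(words) < max_words:
        followers = counts.get(words[-1])
        if not followers:
            break
        next_word = followers.most_common(1)[0][0]
        if next_word == END:
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
answer = generate(model, "landlords")
print(answer)            # landlords must accept cash
print(answer in CORPUS)  # False: the sentence was never in the training text
```

Because "cash" follows "accept" more often than "housing" does, the model completes the sentence about landlords with the more frequent word, producing a confident statement that the training text never made.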

This incident highlights the dangers of governments and corporations deploying chatbots before fully testing their accuracy and reliability. Companies have previously run into problems with chatbots giving false information about return policies, tax issues, and product prices.

In response to the criticism, New York City government officials said they will continue to improve the MyCity chatbot to better support small businesses in the city. Nevertheless, the incident points to the need for more thorough testing and customisation of such systems before they are deployed for public use.

Source: Ars Technica