Opera updates its browser to add support for local AI models

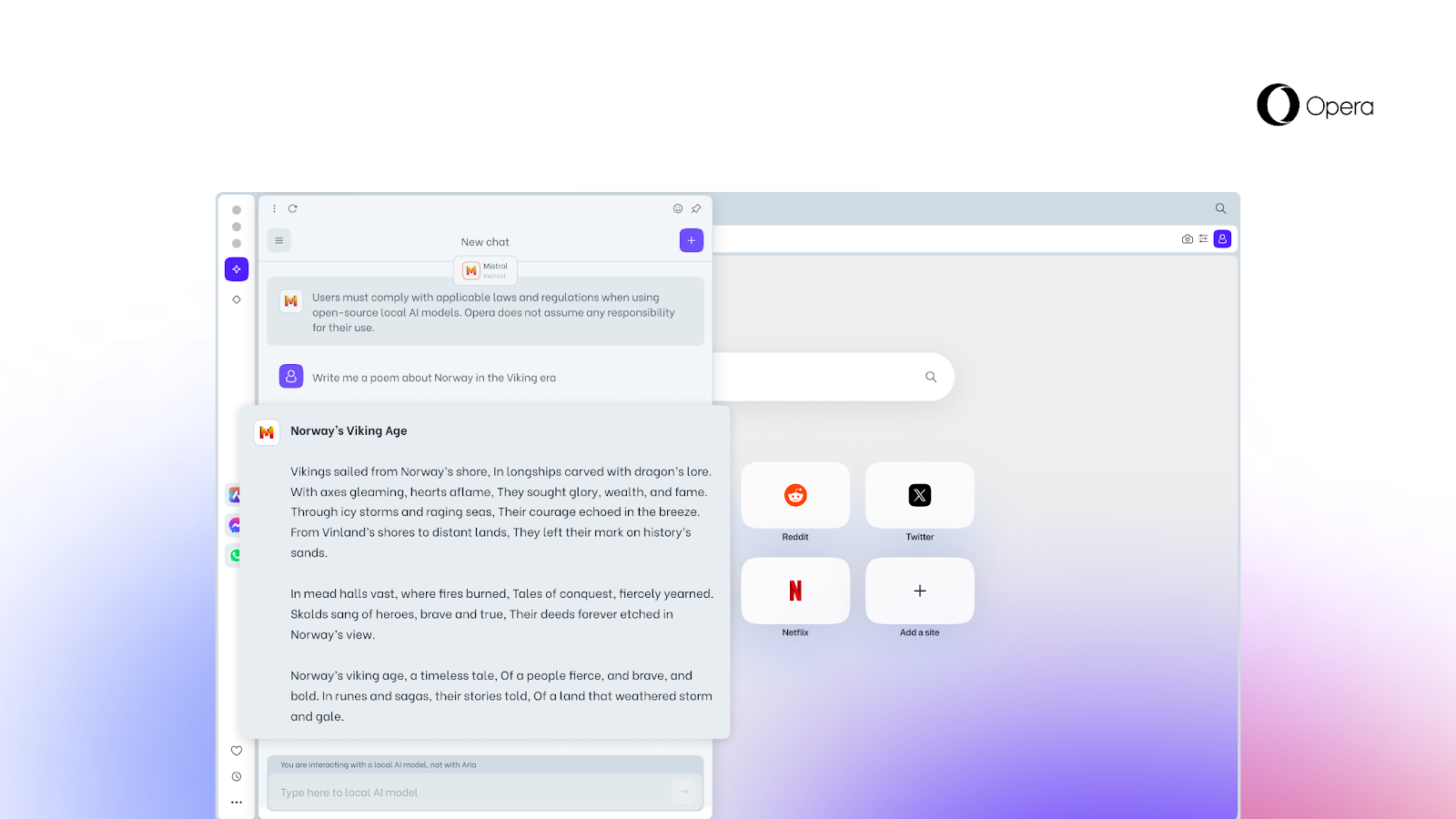

Opera, a company actively investing in artificial intelligence (AI), has unveiled a new feature that allows users to access local AI models directly from the browser.

Here's What We Know

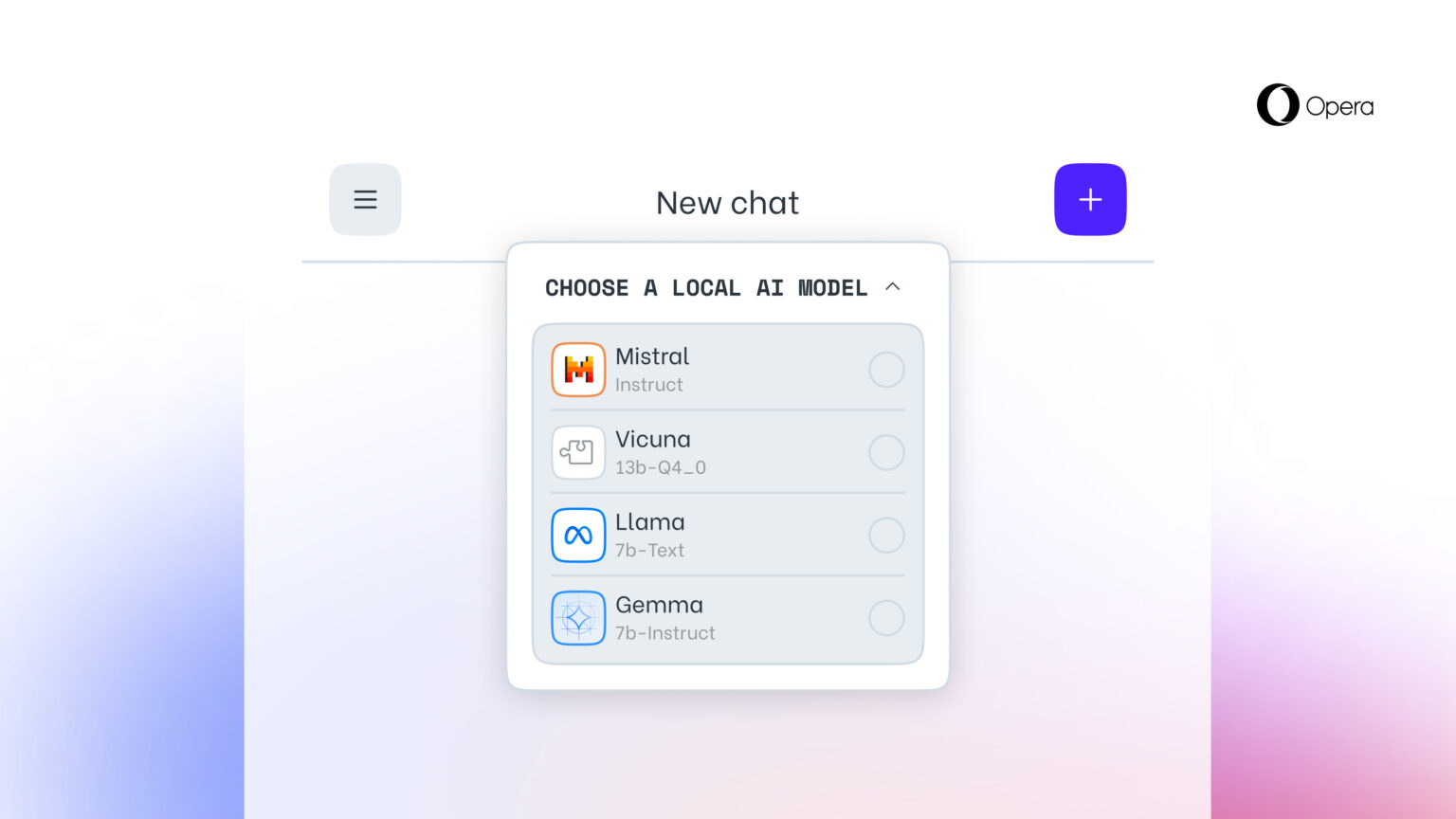

The feature, which no other browser currently offers, relies on experimental support for 150 local large language model (LLM) variants from around 50 model families.

The local models complement Opera's online Aria AI service, which is also available in the Opera browser on iOS and Android. Supported local LLMs include Llama (Meta), Vicuna, Gemma (Google), Mixtral (Mistral AI) and many others.

The new feature is especially important for those who want to maintain privacy when browsing the web. The ability to access local LLMs from Opera means that users' data is stored locally on their device, allowing them to use generative AI without having to send information to a server.

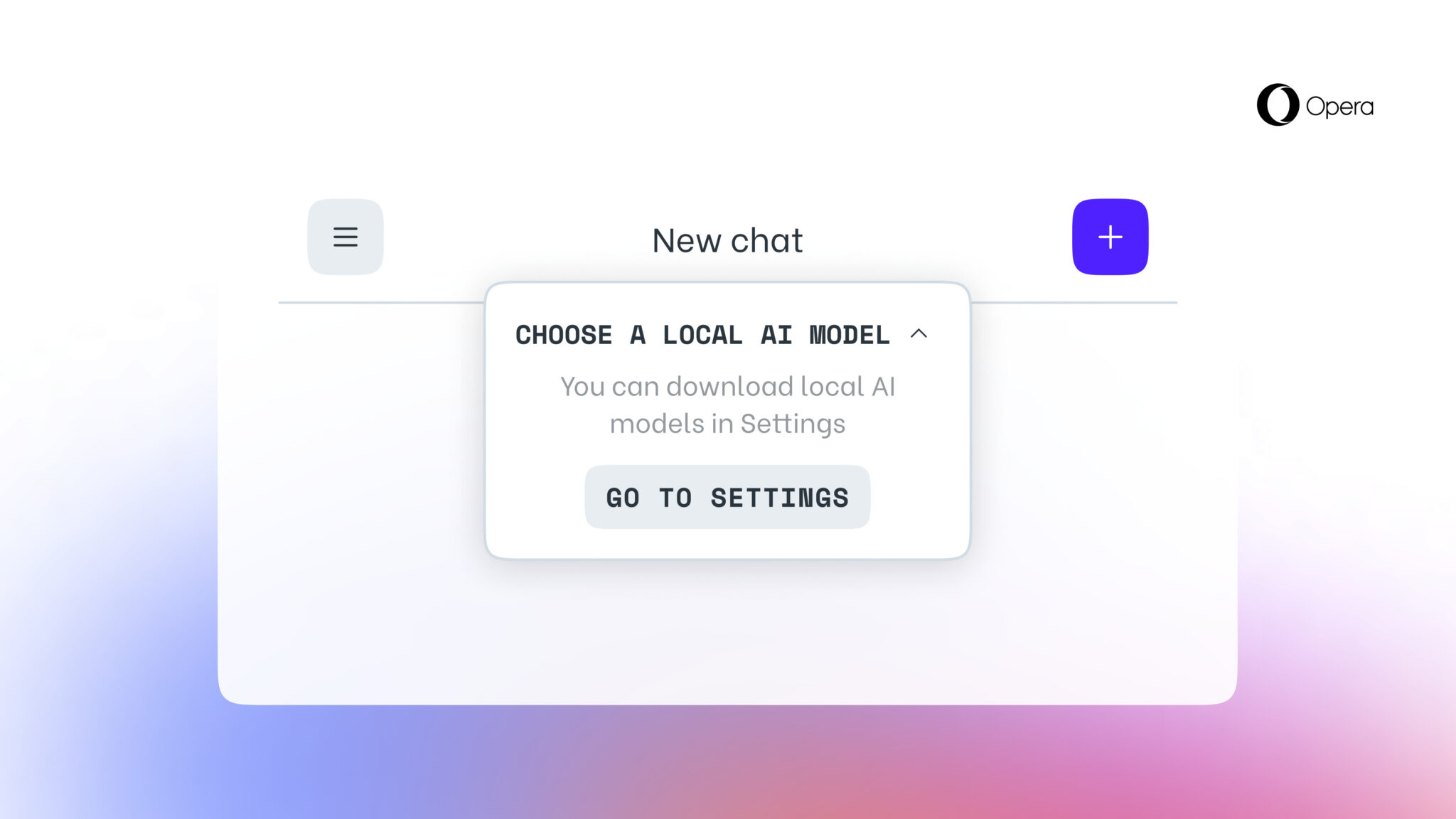

Starting today, the new feature is available to Opera One Developer users. To activate it, upgrade to the latest version of Opera Developer and follow the step-by-step guide.

Once you select a specific LLM, it is downloaded to your device. Each local LLM typically requires between 2 GB and 10 GB of disk space. Once downloaded, the selected LLM is used in place of Aria, the Opera browser's built-in AI.
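
For readers planning to try several models, the storage figures above translate into a simple range estimate. The sketch below is only an illustration based on the 2 GB to 10 GB per-model range quoted here; the function names `estimate_disk_need_gb` and `fits_on_disk` are hypothetical and are not part of Opera or any of its tools.

```python
import shutil

# Per-model size range quoted in the article (2 GB to 10 GB).
# Actual model sizes vary; these bounds are an assumption for the estimate.
MIN_GB_PER_MODEL = 2
MAX_GB_PER_MODEL = 10


def estimate_disk_need_gb(model_count: int) -> tuple[int, int]:
    """Return the (low, high) disk space estimate in GB for model_count models."""
    if model_count < 0:
        raise ValueError("model_count must be non-negative")
    return model_count * MIN_GB_PER_MODEL, model_count * MAX_GB_PER_MODEL


def fits_on_disk(model_count: int, path: str = ".") -> bool:
    """Check whether the worst-case estimate fits in the free space at path."""
    _, high_gb = estimate_disk_need_gb(model_count)
    # shutil.disk_usage reports bytes; convert to decimal gigabytes.
    free_gb = shutil.disk_usage(path).free / 1e9
    return high_gb <= free_gb


if __name__ == "__main__":
    low, high = estimate_disk_need_gb(3)
    print(f"3 models: between {low} GB and {high} GB")
    print("Worst case fits on this disk:", fits_on_disk(3))
```

Checking against the upper bound is a deliberately cautious choice: three models could need anywhere from 6 GB to 30 GB, so the check passes only if the largest case fits.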

Source: Opera