Google Lens gets AI-based answer generation feature for visual search

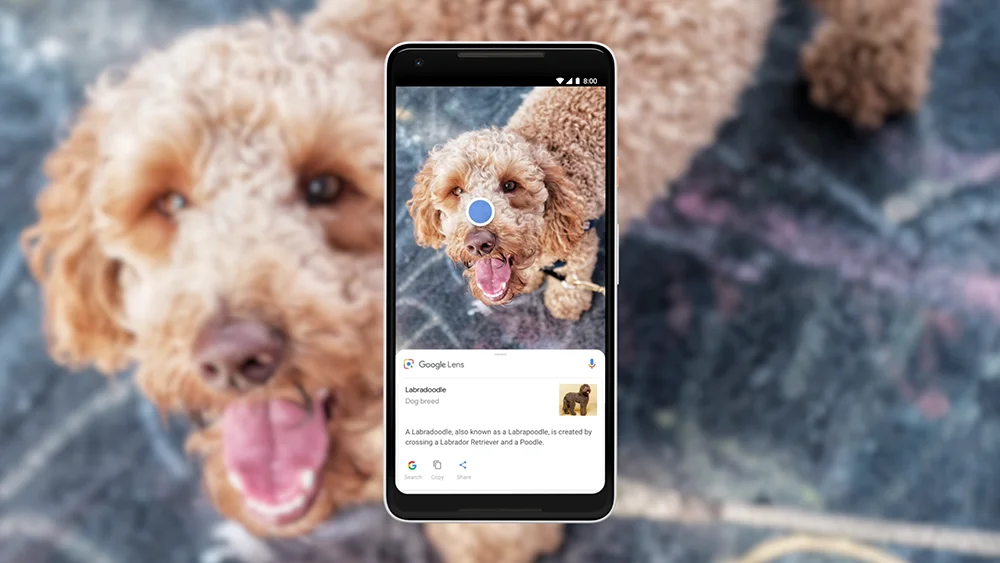

Google has announced enhanced visual search capabilities in the Google Lens app, which can now generate answers using artificial intelligence.

Here's What We Know

When running a search in Lens, a user can point the camera at an object of interest, ask a question about it, and get a detailed answer generated by a neural network based on data from the web.

For example, if you point the camera at a houseplant and ask "How often should I water it?", Lens will give specific care advice for that species, rather than just showing images of similar plants as before.

In addition, the new features are integrated with the "Circle to Search" gesture, which lets you highlight an object or area of interest in an image.

According to Google, Lens' answers are built by aggregating data from a variety of online sources, including websites, online stores and videos. Google says this differs from its experimental Search Generative Experience (SGE) service, where answers are generated by a neural network.

The new Lens feature is already available in the US, in English, on both iOS and Android.

Source: TechCrunch