Apple unveiled an AI model for editing images based on text commands

Laurenz Heymann/Unsplash.

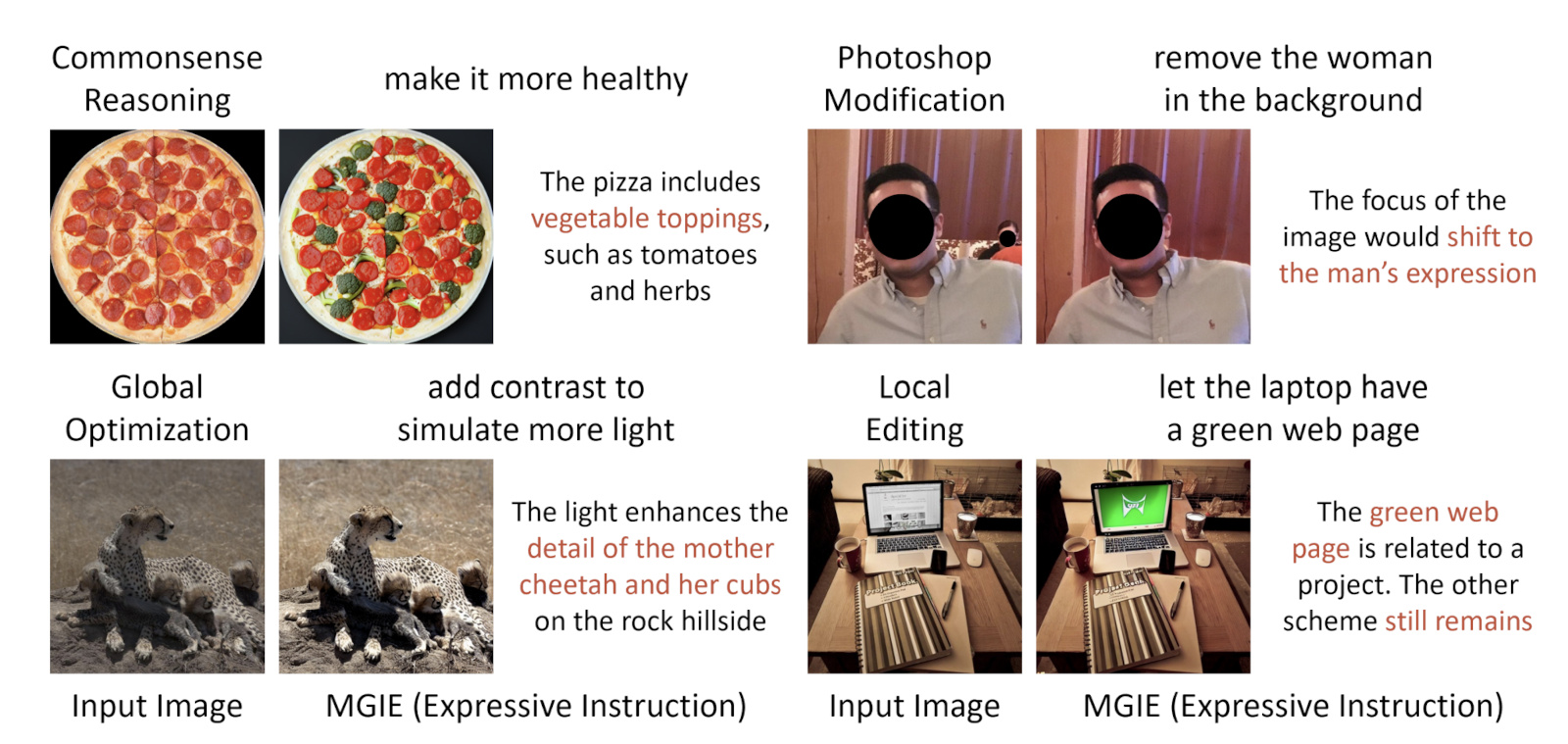

Apple, together with researchers at the University of California, Santa Barbara, has developed and published an artificial intelligence model called MLLM-Guided Image Editing (MGIE). It edits images according to text instructions written in natural language.

Here's What We Know

MGIE is built on multimodal large language models (MLLMs). This lets it interpret short, ambiguous user commands and turn them into concrete photo edits. For example, given a shot of a pizza and the prompt "make healthier", the AI can infer that it should add vegetable toppings.

As well as making major changes to content, the model can perform basic operations such as cropping, rotating, resizing and colour correcting images. The AI can also edit specific regions of an image to transform individual objects.

MGIE is available on GitHub. In addition, Apple has posted a demo of the model on Hugging Face.

It is not yet known whether the company plans to integrate the technology into its own products.

Source: Engadget