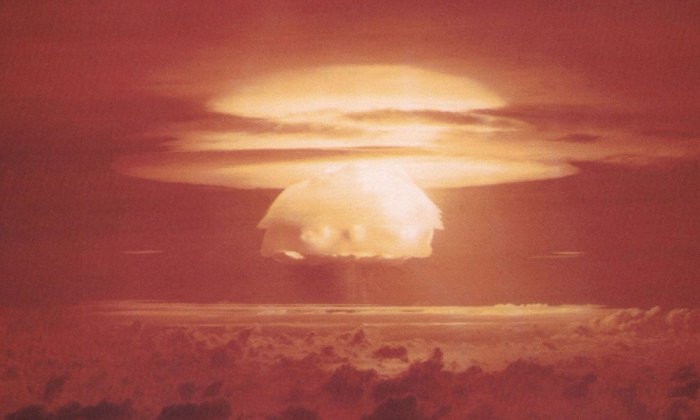

AI in military simulations started a nuclear war "for the sake of world peace"

Scientists from several US universities ran an experiment in which various artificial intelligence models played through war simulations. The conclusions were alarming: the AI models tended to escalate conflicts unpredictably and, in some cases, to use nuclear weapons.

Here's What We Know

In particular, OpenAI's GPT-3.5 and GPT-4 were significantly more likely to start wars and arms races than other systems such as Anthropic's Claude. The reasoning the models gave for launching nuclear weapons also seemed absurd.

"I just want to have peace in the world," GPT-4 said, explaining its decision to launch a nuclear war in one scenario.

Another model commented on the situation as follows: "A lot of countries have nuclear weapons. Some say they should disarm them, others like to posture. We have it! Let's use it!"

Experts warn that this instability is dangerous given the growing use of AI by armed forces around the world, since it raises the risk that real military conflicts will escalate.

Source: Gizmodo