Apple has released compact OpenELM language models to run on gadgets

Mohamed M/Unsplash

Apple has unveiled lightweight OpenELM language models that can run locally on devices without a cloud connection.

Here's What We Know

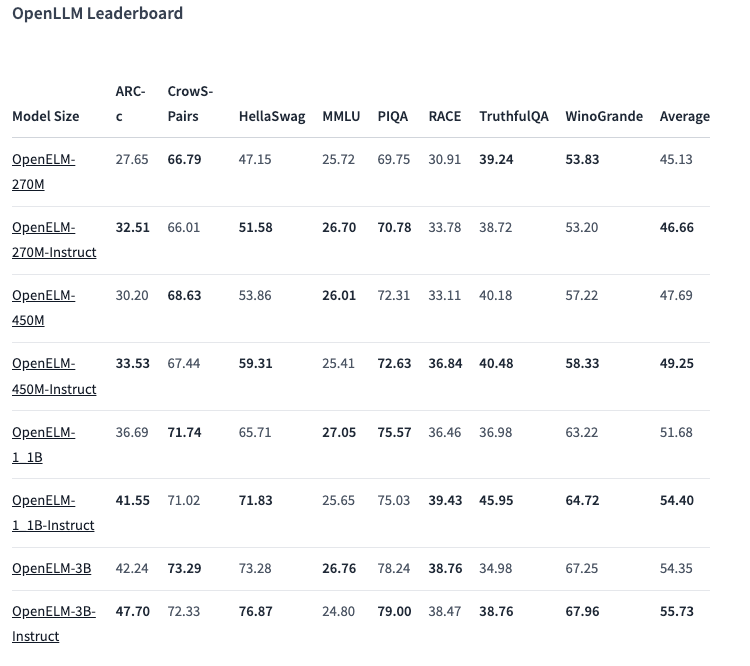

The OpenELM lineup comprises eight models of two types: pre-trained and instruction-tuned. Each type comes in four sizes: 270 million, 450 million, 1.1 billion and 3 billion parameters.

The models were pre-trained on public datasets totalling 1.8 trillion tokens, drawn from sources including Reddit, Wikipedia and arXiv.org.

Thanks to optimisations, OpenELM models can run on regular laptops and even some smartphones. Apple's tests were conducted on PCs with an Intel i9 CPU and an RTX 4090 GPU, as well as on a MacBook Pro with an M2 Max chip.
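To give a rough sense of why models of this size can fit on laptops and phones, here is a minimal back-of-the-envelope sketch in Python. It uses the parameter counts reported above; the bytes-per-parameter figures are our own assumptions about common numeric formats, not numbers from Apple, and the estimate covers the weights only, ignoring activations and other runtime overhead.

```python
# Parameter counts as reported in the article.
PARAM_COUNTS = {
    "270M": 270e6,
    "450M": 450e6,
    "1.1B": 1.1e9,
    "3B": 3e9,
}

# Assumption (not from Apple): storage cost per parameter
# for three common numeric formats.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int4": 0.5}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


def footprint_table() -> dict:
    """Map each model size to its estimated weight footprint per format."""
    return {
        size: {
            fmt: round(weight_memory_gib(n, b), 2)
            for fmt, b in BYTES_PER_PARAM.items()
        }
        for size, n in PARAM_COUNTS.items()
    }


if __name__ == "__main__":
    for size, row in footprint_table().items():
        print(size, row)
```

Under these assumptions, the 3 billion parameter model needs roughly 5.6 GiB for its weights at 16-bit precision, while the 270 million parameter model needs about 0.5 GiB. Real memory use will be higher once the runtime and context are accounted for.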

According to Apple, the models perform well for their size. The instruction-tuned 450 million parameter variant particularly stands out. OpenELM-1.1B also outperformed OLMo, a comparable open language model, by 2.36% while requiring half as many tokens for pre-training.

On the ARC-C benchmark, designed to test knowledge and reasoning skills, the pre-trained OpenELM-3B variant reached an accuracy of 42.24%. It scored 26.76% on MMLU and 73.28% on HellaSwag.

The company has published the OpenELM source code on Hugging Face under an open licence, including trained versions, benchmarks and model instructions.

However, Apple warns that OpenELM models may produce incorrect, harmful or inappropriate responses because they lack safety safeguards.

Source: VentureBeat