Twitter is testing a new concept for censoring responses to tweets

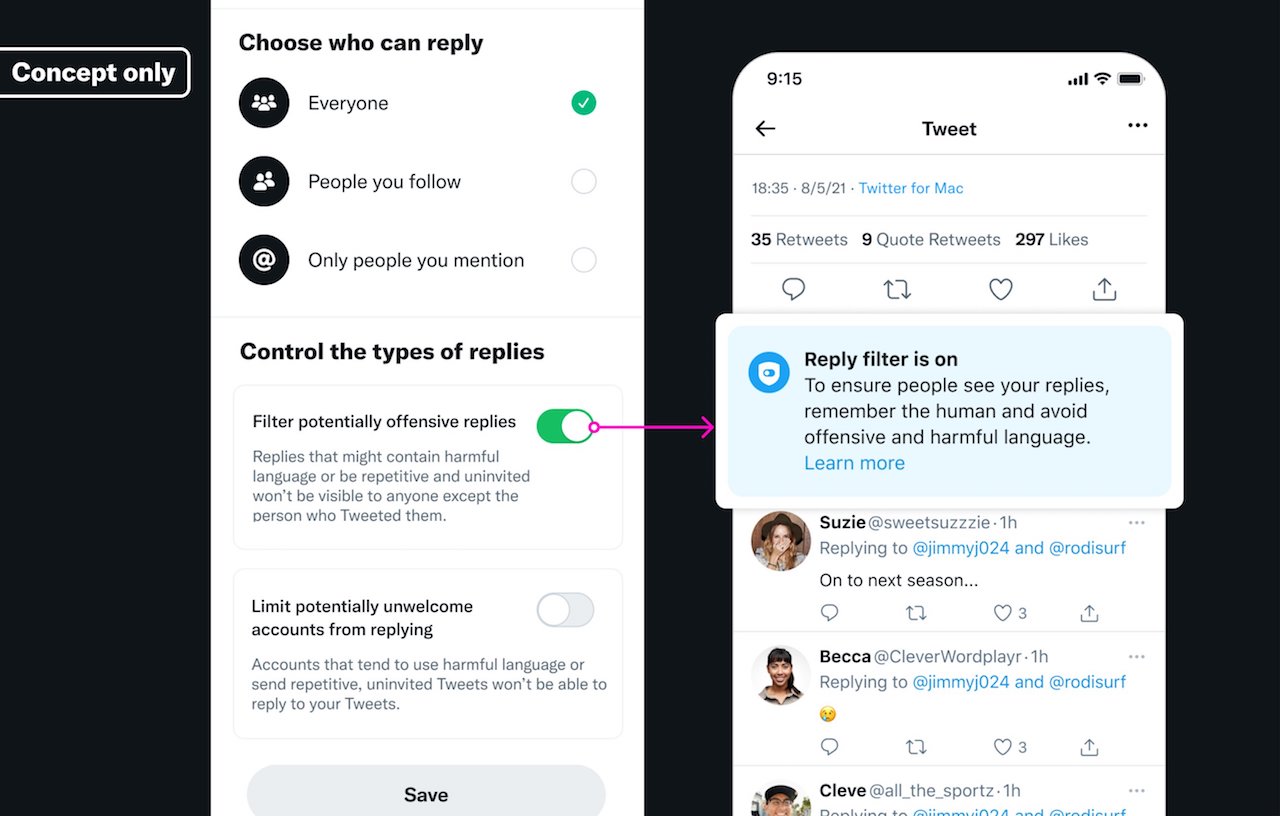

Twitter wants to reduce the number of toxic responses to tweets ("replies" in Twitter parlance) by giving users tools to head them off more proactively. In the future, these tools may include a feature that filters potentially offensive replies and another that limits replies from potentially unwanted accounts.

Senior Twitter product designer Paula Barcante has published a preview of these features, which at this stage are only concepts meant to gather user feedback.

According to Barcante, if Twitter detects potentially harmful replies to your tweets, it will ask whether you want to turn on these controls. With the reply filter enabled, Twitter would hide the offending replies from you and from everyone else except the user who wrote them. If you choose to limit unwanted accounts, users who have recently broken a rule would not be able to reply to your tweets at all.

> If potentially harmful or offensive replies to your Tweets are detected, we'd let you know in case you want to turn on these controls to filter or limit future unwelcome interactions.
>
> - Paula Barcante (@paulabarcante) September 24, 2021

> You would also be able to access these controls in your settings. pic.twitter.com/ok5qXOf33Z

Because the process will be automated, Barcante acknowledges that it may not always be accurate and could end up filtering out relevant replies that break no rules. That is why the company is also exploring ways to let users view filtered replies and limited accounts. Should the tools be released, their final versions could of course differ significantly from these early concepts.

Barcante has not yet answered whether turning on the reply filter would hide all replies from an account or only the specific reply the site deemed potentially offensive. She did say that what she showed was only an "early concept that requires testing and iteration," and that Twitter would share more details if it decided to launch the tools.

Source: @paulabarcante